
Cerebras Systems announced today that it will host DeepSeek's breakthrough R1 artificial intelligence model on U.S. servers, promising speeds up to 57 times faster than GPU-based solutions while keeping sensitive data within American borders. The move comes amid growing concerns about rapid AI progress and data privacy.

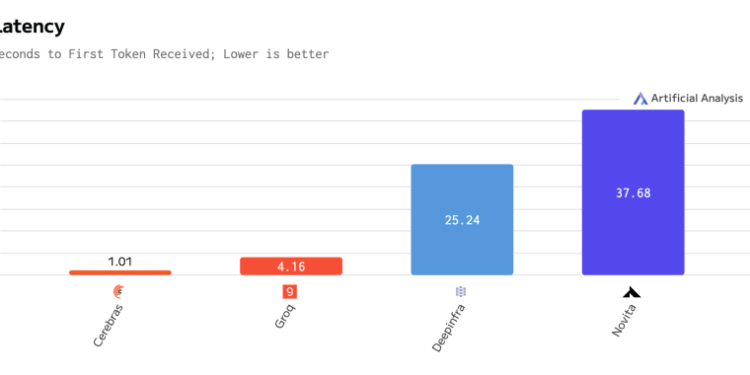

The AI chip startup will deploy a 70-billion-parameter version of DeepSeek-R1 running on its proprietary wafer-scale hardware, delivering 1,600 tokens per second, a dramatic improvement over traditional GPU implementations that have struggled with newer "reasoning" models.
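To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes, for illustration only, that the "up to 57 times faster" claim applies directly to the 1,600 tokens-per-second figure; the article does not state this. The 8,000-token reasoning trace is a hypothetical length, not a number from Cerebras or DeepSeek.

```python
# Figures quoted in the article.
CEREBRAS_TOKENS_PER_SEC = 1600  # 70B-parameter DeepSeek-R1 on Cerebras hardware
CLAIMED_SPEEDUP = 57            # "up to 57 times faster" than GPU-based solutions

# Hypothetical length of one reasoning response (an assumption for illustration).
EXAMPLE_TRACE_TOKENS = 8000


def implied_baseline_rate(fast_rate: float, speedup: float) -> float:
    """Throughput the slower system would have if the speedup applied to fast_rate."""
    return fast_rate / speedup


def seconds_to_generate(num_tokens: int, tokens_per_sec: float) -> float:
    """Time to emit num_tokens at a constant generation rate."""
    return num_tokens / tokens_per_sec


gpu_rate = implied_baseline_rate(CEREBRAS_TOKENS_PER_SEC, CLAIMED_SPEEDUP)
cerebras_seconds = seconds_to_generate(EXAMPLE_TRACE_TOKENS, CEREBRAS_TOKENS_PER_SEC)
gpu_seconds = seconds_to_generate(EXAMPLE_TRACE_TOKENS, gpu_rate)

print(f"Implied GPU rate: {gpu_rate:.1f} tokens/s")
print(f"Cerebras time for {EXAMPLE_TRACE_TOKENS} tokens: {cerebras_seconds:.1f} s")
print(f"GPU time for {EXAMPLE_TRACE_TOKENS} tokens: {gpu_seconds:.1f} s")
```

Under these assumptions, the same response would take about 5 seconds at the quoted Cerebras rate and roughly 285 seconds at the implied GPU rate. Reasoning models tend to produce long intermediate outputs, which is why generation speed matters more for them than for shorter chat-style replies.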

Why DeepSeek's reasoning models are reshaping enterprise AI

“These reasoning models affect the economy,” said James Wang, a senior executive at Cerebras, in an exclusive interview with VentureBeat. “Any knowledge worker basically has to perform some kind of multi-step cognitive task. And these reasoning models will be the tools that enter their workflow.”

The announcement follows a tumultuous week in which DeepSeek's emergence triggered Nvidia's largest-ever loss of market value, nearly $600 billion, raising questions about the chip giant's AI supremacy. Cerebras' solution directly addresses two key concerns that have emerged: the computational requirements of advanced AI models and data sovereignty.

“If you use DeepSeek's API, which is very popular right now, that data gets sent straight to China,” said Wang. “That is one severe caveat that [makes] many American companies and enterprises … not willing to consider [it].”

How Cerebras' wafer-scale technology beats traditional GPUs at AI speed

Cerebras gets its speed advantage from a novel chip architecture that keeps entire AI models on a single wafer-sized processor, eliminating the memory bottlenecks that plague GPU-based systems. The company claims that its implementation of DeepSeek-R1 matches or exceeds the performance of OpenAI's proprietary models, while running entirely on American soil.

The development represents a significant shift in the AI landscape. DeepSeek, founded by former hedge fund executive Liang Wenfeng, shocked the industry by achieving sophisticated AI reasoning capabilities at a reported 1% of the cost of American competitors. Cerebras' hosting solution now offers American companies a way to take advantage of these advances while maintaining control of their data.

“It is actually a great story that American research labs gave this gift to the world. The Chinese took it and improved it, but it has limitations because it runs in China, has censorship problems, and now we are taking it back and running it in American data centers, without censorship, without data retention,” said Wang.

American technological leadership faces new questions as AI innovation goes global

The service will be available through a developer preview starting today. Although it will initially be free, Cerebras plans to implement API access controls due to strong early demand.

The move comes as American lawmakers grapple with the implications of DeepSeek's rise, which has exposed potential limits in the U.S. trade restrictions designed to maintain technological advantages over China. The ability of Chinese companies to achieve breakthrough AI capabilities despite chip export controls has prompted calls for new regulatory approaches.

Industry analysts suggest that this development could accelerate the shift away from GPU-dependent AI infrastructure. “Nvidia is no longer the leader in inference performance,” noted Wang, pointing to benchmarks showing superior performance from various specialized AI chips. “These other AI chip companies are really faster than GPUs at running these latest models.”

The impact extends beyond technical metrics. As AI models increasingly incorporate sophisticated reasoning capabilities, their computational demands have skyrocketed. Cerebras argues that its architecture is better suited to these emerging workloads, potentially reshaping the competitive landscape of enterprise AI deployment.