In the early hours of August 28, a quiet parking lot on a Missouri college campus became the scene of a violent rampage of vandalism. In the space of 45 minutes, 17 cars were left with broken windows, shattered mirrors, torn-off windshield wipers and dented bodywork, causing damage worth tens of thousands of dollars.

After a month of investigation, police gathered evidence including shoe prints, witness statements and security camera footage. But it was an alleged confession to ChatGPT that ultimately led to charges against Ryan Schaefer, a 19-year-old student.

In conversations with the AI chatbot shortly after the incident, Schaefer described the carnage to the app on his phone and asked, “How fucked up am I, bro? … What if I smash several cars?”

This appears to be the first-ever case of someone incriminating themselves through artificial intelligence, with police citing the "disturbing dialogue" in a report detailing the charges against Schaefer.

Less than a week later, ChatGPT was mentioned in an affidavit again, this time for a much higher-profile crime. Jonathan Rinderknecht, 29, was arrested for allegedly starting the Palisades fire, which destroyed thousands of homes and businesses and killed 12 people in California in January. Among the evidence cited was his earlier request for the AI application to generate images of a burning city.

These two cases are unlikely to be the last in which AI implicates people in crimes. OpenAI boss Sam Altman has acknowledged that there are no legal protections for users' conversations with the chatbot. According to Altman, this highlights not only the privacy concerns around the emerging technology, but also how much intimate information people are willing to share with it.

"People talk about the most personal shit in their lives to ChatGPT," he said on a podcast earlier this year. "People are using it, young people in particular, as a therapist, a life coach, having these relationship issues… And right now, if you talk to a therapist or a lawyer or a doctor about these issues, you have a legal privilege."

The versatility of AI models like ChatGPT means people can use them for everything from editing private family photos to deciphering complex documents like bank loan offers or rental agreements, all of which contain highly personal information. A recent OpenAI study found that ChatGPT users are increasingly turning to it for medical advice, shopping, and even role-playing games.

Other AI applications explicitly present themselves as virtual therapists or romantic partners, with few of the safeguards used by more established companies, while illicit services on the dark web allow people to treat AI not just as a confidante, but as an accomplice.

The amount of data being shared is astonishing, and it is valuable both to law enforcement and to criminals who might seek to exploit it. When Perplexity launched an AI-powered web browser earlier this year, security researchers found that hackers could hijack it to access a user's data, which could then be used to blackmail them.

The companies controlling this technology are also looking to profit from this new trove of deeply intimate data. Starting in December, Meta will begin using people’s interactions with its AI tools to serve targeted ads across Facebook, Instagram and Threads.

Voice conversations and text exchanges with the AI will be analyzed to learn more about a user's personal preferences and the products they might be interested in. And there's no way to opt out.

"For example, if you chat with Meta AI about hiking, we might learn that you are interested in hiking," Meta said in a blog post announcing the update. "As a result, you might start to see recommendations for hiking groups, posts from friends on the trail, or advertisements for hiking shoes."

This may seem relatively innocuous, but case studies of targeted ads served through search engines and social media show just how destructive they can be. People searching for terms like “need financial help” were served ads for predatory loans, online casinos targeted problem gamblers with free spins, and elderly users were encouraged to spend their retirement savings on overpriced gold coins.

Meta CEO Mark Zuckerberg is well aware of the amount of private data that will be swept up by the new AI advertising policy. In April, he said users would be able to let Meta AI "know a lot about you and the people you care about, through our apps." He is also the same person who once called early Facebook users "dumb fucks" for trusting him with their data.

“Like it or not, Meta is not really in the business of connecting friends around the world. Its business model relies almost entirely on selling targeted advertising space on its platforms,” Pieter Arntz of cybersecurity firm Malwarebytes wrote shortly after Meta’s announcement.

“The industry faces major challenges around ethics and privacy. Brands and AI providers must balance personalization with transparency and user control, especially as AI tools collect and analyze sensitive behavioral data.”

As the utility of AI deepens the trade-off between privacy and convenience, our relationship with technology is once again under scrutiny. In the same way that the Cambridge Analytica scandal forced people to consider how they used social media sites like Facebook, this new trend in data collection, along with cases like Schaefer’s and Rinderknecht’s, could see privacy pushed to the top of the tech agenda.

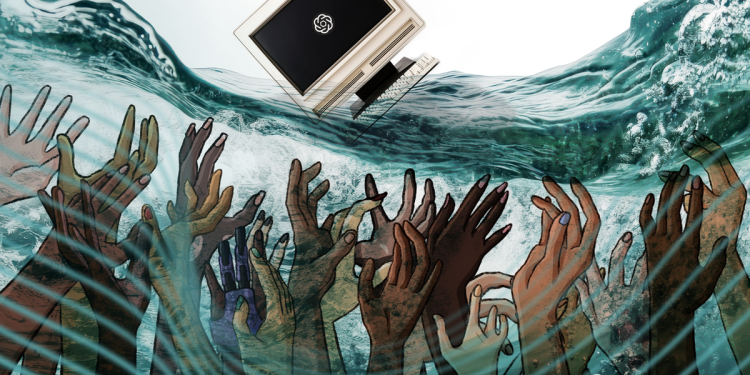

Less than three years after the launch of ChatGPT, more than a billion people are now using standalone AI applications. These users are often unknowingly exploited by predatory tech companies, advertisers, and even criminal investigators.

There is an old adage that if you don't pay for a service, you are not the customer; you are the product. In this new era of AI, that saying may need to be rewritten, replacing the word "product" with "prey".